Pruna AI Launches Open Source Optimization Framework for AI Models

Pruna AI, a European startup, is releasing its optimization framework for AI models as open source on Thursday. The framework applies several efficiency methods to a given model, including caching, pruning, quantization, and distillation.

Overview of Pruna AI’s Framework

The framework lets users apply compression methods individually or in combination. According to John Rachwan, co-founder and CTO, it also evaluates a model after compression to check for quality loss. “We also standardize saving and loading the compressed models,” Rachwan told TechCrunch in an interview.

Efficiency Evaluation

The framework can measure whether a compressed model has lost significant quality and how much performance it has gained in return. Rachwan compared the company’s role to that of Hugging Face: “If I were to use a metaphor, we are similar to how Hugging Face standardized transformers and diffusers.”
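To make the idea of weighing quality loss against gains concrete, here is a minimal sketch of one compression method, 8-bit quantization, followed by a simple evaluation. It is a generic illustration and not Pruna AI's API: the function names, the sample weights, and the choice of mean absolute error as the quality measure are all assumptions made for this example.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization of floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    codes = [max(-127, min(127, round(w / scale))) for w in weights]
    return codes, scale


def dequantize(codes, scale):
    """Map integer codes back to approximate float values."""
    return [c * scale for c in codes]


def evaluate(weights, bits_before=32, bits_after=8):
    """Report the storage saving and the error introduced by quantization."""
    codes, scale = quantize_int8(weights)
    restored = dequantize(codes, scale)
    mean_abs_error = sum(abs(a - b) for a, b in zip(weights, restored)) / len(weights)
    return {
        "size_ratio": bits_before / bits_after,  # how many times smaller
        "mean_abs_error": mean_abs_error,        # stand-in for quality loss
    }


weights = [0.5, -1.0, 0.25, 0.0, 0.75]  # made-up values for illustration
report = evaluate(weights)
print(report)
```

A real evaluation would compare task-level metrics, such as accuracy or image quality, and measured latency, not raw weight error. The shape of the comparison is the same: one number for what was gained, one for what was lost.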

The Importance of Compression Techniques

Established AI labs, such as OpenAI, have long used compression methods to speed up their models. GPT-4 Turbo, a faster variant of GPT-4, was likely developed through distillation. Similarly, Flux.1-schnell is a distilled version of the Flux.1 image generation model from Black Forest Labs.
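In distillation, a smaller "student" model is trained to reproduce the outputs of a larger "teacher" model. The sketch below shows the commonly used loss for this: the KL divergence between temperature-softened teacher and student output distributions. It is a textbook formulation under assumed names and made-up logits, and it is not a description of how OpenAI or Black Forest Labs trained their models.

```python
import math


def softmax(logits, temperature=1.0):
    """Convert logits to probabilities; a higher temperature gives a softer distribution."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL(teacher || student) on softened distributions, scaled by temperature squared."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2


teacher = [4.0, 1.0, 0.5]          # made-up logits for illustration
close_student = [3.5, 1.2, 0.4]    # roughly agrees with the teacher
far_student = [0.5, 1.0, 4.0]      # disagrees with the teacher

print(distillation_loss(teacher, close_student))
print(distillation_loss(teacher, far_student))
```

Training the student to minimize this loss pushes its predictions toward the teacher's, which is why the student that agrees with the teacher scores lower.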

Addressing the Market Needs

Large organizations typically build these compression methods in-house, and Rachwan pointed to a lack of cohesive tooling in the open source ecosystem. “For big companies, what they usually do is that they build this stuff in-house,” he said. Pruna AI’s offering, he noted, stands out by bringing multiple methods together in a form that is easy to use.

Focus on Various AI Models

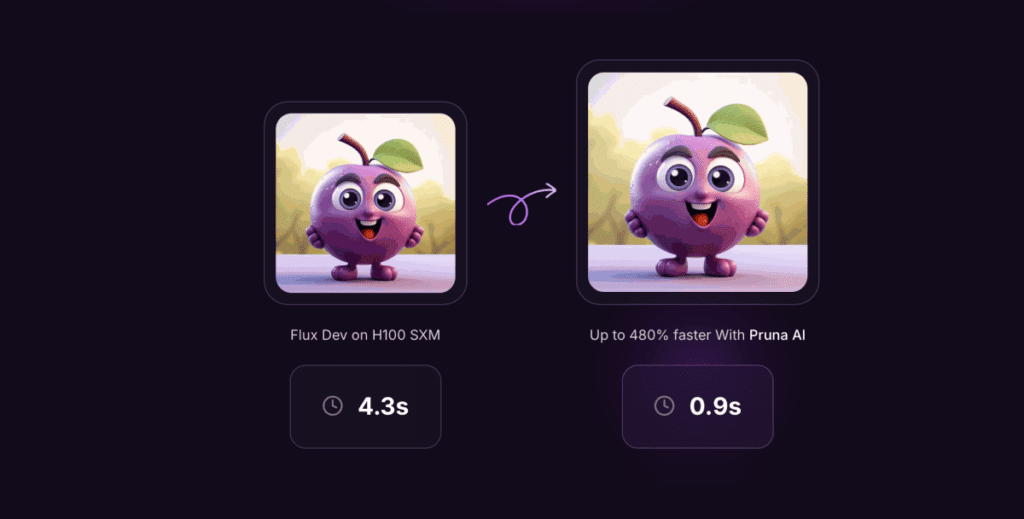

Pruna AI’s framework supports a range of model types, including large language models, diffusion models, speech-to-text models, and computer vision models. For now, the company is concentrating on image and video generation models.

Future Developments

Among the planned features is a compression agent that will automate the optimization process. Users will supply a model along with their targets, such as the desired speed improvement and the acceptable accuracy loss, and the agent will determine the best settings. “You don’t have to do anything as a developer,” Rachwan said of the feature.
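The article does not describe how the agent will work internally. As a rough illustration of the underlying idea only, the sketch below picks the fastest option from a list of already-measured candidates that stays within the user's accuracy budget. The `Candidate` class, the `pick_config` function, and every number in the list are hypothetical and invented for this example.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Candidate:
    name: str
    speedup: float        # measured speedup over the baseline (2.0 = twice as fast)
    accuracy_drop: float  # measured accuracy loss in percentage points


def pick_config(
    candidates: List[Candidate],
    min_speedup: float,
    max_accuracy_drop: float,
) -> Optional[Candidate]:
    """Return the fastest candidate that meets both constraints, or None if none does."""
    feasible = [
        c for c in candidates
        if c.speedup >= min_speedup and c.accuracy_drop <= max_accuracy_drop
    ]
    if not feasible:
        return None
    return max(feasible, key=lambda c: c.speedup)


# Made-up measurements for illustration only.
candidates = [
    Candidate("caching only", speedup=1.3, accuracy_drop=0.1),
    Candidate("8-bit quantization", speedup=2.1, accuracy_drop=0.8),
    Candidate("quantization + pruning", speedup=3.4, accuracy_drop=3.5),
    Candidate("distilled student", speedup=2.8, accuracy_drop=1.9),
]

best = pick_config(candidates, min_speedup=1.5, max_accuracy_drop=2.0)
print(best.name if best else "no configuration meets the constraints")
```

A real agent would also have to generate and benchmark the candidates, which is the expensive part. This sketch covers only the final selection step.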

Business Model and Financial Backing

Pruna AI charges on a consumption basis, much like renting GPU capacity from a cloud provider. For businesses whose infrastructure depends on AI models, an optimized model could mean significant savings. As an example, the company has made a Llama model up to eight times smaller without significant quality loss.

Pruna AI recently raised $6.5 million in seed funding from investors including EQT Ventures, Daphni, Motier Ventures, and Kima Ventures.

Conclusion

By packaging established compression methods into a single open source framework, Pruna AI aims to give organizations without in-house tooling a practical way to make their AI models smaller and faster.